The Architecture Behind Agentic AI That Thinks and Acts

From One-Shot Responses to Agentic Systems

AI is evolving beyond just answering questions. Traditional language models like ChatGPT and Claude work well for single-turn responses. Ask a question, get an answer. But most real-world problems aren’t one-and-done. They involve multiple steps, tools, validations, and shifting context.

That’s where agentic systems come in.

Unlike one-shot models, agentic AI can break down complex tasks, plan actions, call tools, reflect on what’s working, and adapt when it’s not. Companies like OpenAI and Google DeepMind are already building toward this architecture, betting on agents as the future of intelligent automation.

“A solid system also needs reliable memory management (both short- and long-term), a robust tool execution framework, feedback and correction mechanisms. Without those, it’s not really an agent, it’s just a chat interface with extra steps.” – David Alami, PhD in Technical Sciences and AI Engineer at Busy Rebel

This article explores why the shift from reactive to agentic systems matters and what’s under the hood. We’ll break down the architectural components, how memory and execution really work, and what expert insights reveal about building production-grade agents. Agentic AI isn’t just a framework; it’s a paradigm shift for the next era of software.

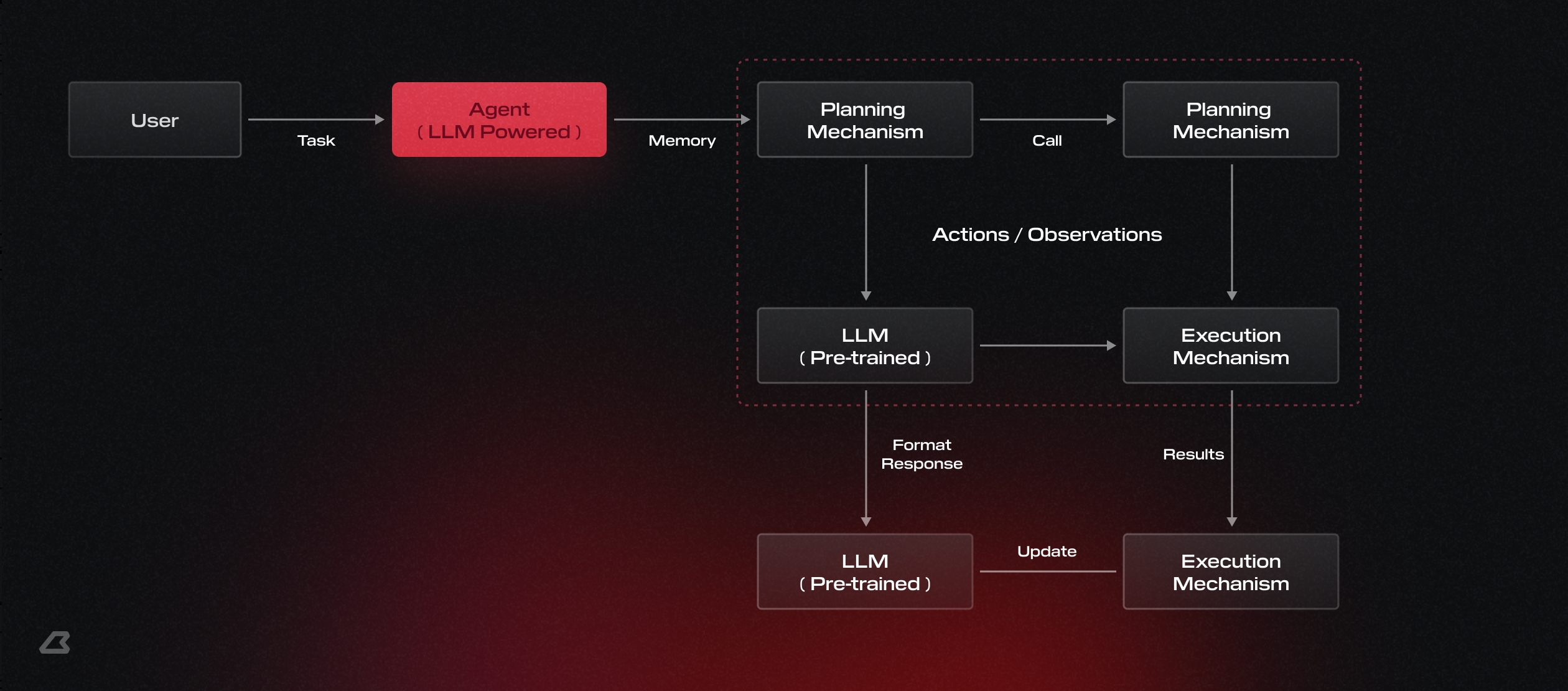

Core Architecture of Agentic AI Systems

What separates real agents from clever prompts? Architecture. Agentic systems are built on a modular structure, and they only work when each layer (planning, execution, memory, feedback) knows its job and does it well. Without a clear separation of concerns, things break fast.

In production-grade setups, the planner acts like a dynamic roadmap. It breaks a user’s request into logical steps and sequences the work. The execution engine picks up these steps and calls APIs, executes code, or queries databases. If something fails, like a null result or tool error, the system doesn’t freeze. Instead, it re-evaluates the context, revises the plan, and continues.
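The Python sketch below makes the planner/executor split concrete. The planner and replanner are rule-based stand-ins for what would normally be LLM calls. The tool names (`search_primary`, `search_backup`, `summarize`) are hypothetical. What matters is the control flow: a null result or an exception triggers a plan revision instead of a crash, and a cap limits how many times the agent may replan.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Step:
    tool: str  # name of the tool to call
    arg: str   # input for the tool; "$prev" means "use the previous step's output"


# Hypothetical tools standing in for API calls, code execution, or database queries.
def search_primary(query: str) -> Optional[str]:
    return None  # simulates a null result from the first-choice tool


def search_backup(query: str) -> Optional[str]:
    return f"results for {query!r}"


def summarize(text: str) -> Optional[str]:
    return text[:40]


TOOLS: Dict[str, Callable[[str], Optional[str]]] = {
    "search_primary": search_primary,
    "search_backup": search_backup,
    "summarize": summarize,
}


def plan(request: str) -> List[Step]:
    """Planner stand-in (an LLM in practice): split the request into ordered steps."""
    return [Step("search_primary", request), Step("summarize", "$prev")]


def replan(failed: Step, remaining: List[Step]) -> Optional[List[Step]]:
    """Replanning stand-in: swap a failed primary search for the backup search."""
    if failed.tool == "search_primary":
        return [Step("search_backup", failed.arg)] + remaining
    return None  # no known recovery for this failure


def run(request: str, max_replans: int = 2) -> List[str]:
    """Execution engine: run steps in order and replan on errors or null results."""
    steps = plan(request)
    outputs: List[str] = []
    replans = 0
    while steps:
        step = steps.pop(0)
        arg = outputs[-1] if step.arg == "$prev" and outputs else step.arg
        try:
            result = TOOLS[step.tool](arg)
        except Exception:
            result = None  # treat tool exceptions like null results
        if result is None:
            revised = replan(step, steps) if replans < max_replans else None
            if revised is None:
                raise RuntimeError(f"cannot recover from failed step: {step}")
            steps, replans = revised, replans + 1
            continue
        outputs.append(result)
    return outputs
```

Calling `run("agent memory")` makes the primary search fail. The executor then swaps in the backup search and summarizes its output. The `max_replans` cap matters in practice: without it, a planner that keeps proposing failing steps would loop forever.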

Memory is a key piece of the puzzle. Our expert notes that short-term context is usually held in-memory (or Redis) for quick session-level recall, while long-term memory lives in vector databases like Pinecone, where embeddings can be retrieved semantically across time. The right memory strategy helps prevent agents from reusing outdated information or injecting irrelevant facts into decision-making.
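The short-/long-term split can be sketched in a few lines. This is a toy built on stated assumptions, not a Redis or Pinecone client:

- A bounded `deque` plays the session cache.
- A list of bag-of-words vectors, searched with cosine similarity, plays the vector database.
- The `max_age_s` and `min_score` filters (both hypothetical parameters) show how a memory layer can keep stale or irrelevant facts out of the agent’s context.

```python
import math
import time
from collections import Counter, deque
from typing import Deque, List, Optional, Tuple


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding'; a real system would call an embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


class AgentMemory:
    def __init__(self, short_term_size: int = 5) -> None:
        # Short-term: recent session turns (stand-in for an in-memory cache or Redis).
        self.short_term: Deque[str] = deque(maxlen=short_term_size)
        # Long-term: (embedding, text, timestamp) records (stand-in for a vector DB).
        self.long_term: List[Tuple[Counter, str, float]] = []

    def remember_turn(self, text: str) -> None:
        self.short_term.append(text)  # oldest turn is dropped once maxlen is reached

    def store_fact(self, text: str, ts: Optional[float] = None) -> None:
        self.long_term.append((embed(text), text, time.time() if ts is None else ts))

    def recall(self, query: str, k: int = 2, max_age_s: Optional[float] = None,
               min_score: float = 0.1) -> List[str]:
        """Return up to k long-term facts most similar to the query."""
        now = time.time()
        q = embed(query)
        scored = []
        for vec, text, ts in self.long_term:
            if max_age_s is not None and now - ts > max_age_s:
                continue  # skip stale facts so outdated information is not reused
            score = cosine(q, vec)
            if score >= min_score:  # drop weakly related facts
                scored.append((score, text))
        scored.sort(reverse=True)
        return [text for _, text in scored[:k]]
```

In a real deployment, `embed` would call an embedding model and `recall` would run a nearest-neighbour query against the vector store. The filtering logic would stay conceptually the same.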

Tool usage is not hard-coded. Each tool is described by a structured specification, typically JSON with a name, a description, and its parameters. The agent maps task intent to those specifications at runtime. The LLM reads the specs, decides which tool to call, and produces the call’s arguments in the expected structure. This flexibility makes agentic systems useful for workflows that require chaining, not just single-turn logic.
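The sketch below shows that pattern. It assumes a JSON-Schema-style tool description, similar in spirit to what function-calling LLM APIs accept. The following are all illustrative, since real providers each define their own wire format:

- the tool names,
- the stubbed results,
- the `{"tool": ..., "arguments": ...}` call format.

The dispatcher validates the model’s chosen call against the spec before executing anything.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical tool specs in a JSON-Schema-like shape (illustrative names and fields).
TOOL_SPECS = [
    {
        "name": "get_weather",
        "description": "Current forecast for a city",
        "parameters": {"type": "object",
                       "properties": {"city": {"type": "string"}},
                       "required": ["city"]},
    },
    {
        "name": "convert_currency",
        "description": "Convert an amount into another currency",
        "parameters": {"type": "object",
                       "properties": {"amount": {"type": "number"},
                                      "to": {"type": "string"}},
                       "required": ["amount", "to"]},
    },
]


def get_weather(city: str) -> str:
    return f"forecast for {city}: sunny"  # stubbed; a real tool would call a weather API


def convert_currency(amount: float, to: str) -> str:
    rates = {"EUR": 0.9}  # stubbed rate table, illustrative only
    return f"{amount * rates[to]:.2f} {to}"


IMPLEMENTATIONS: Dict[str, Callable[..., Any]] = {
    "get_weather": get_weather,
    "convert_currency": convert_currency,
}


def dispatch(llm_output: str) -> Any:
    """Parse a model's JSON tool call, check it against the spec, and run the tool."""
    call = json.loads(llm_output)
    spec = next((s for s in TOOL_SPECS if s["name"] == call["tool"]), None)
    if spec is None:
        raise ValueError(f"unknown tool: {call['tool']}")
    args = call.get("arguments", {})
    missing = [p for p in spec["parameters"]["required"] if p not in args]
    if missing:
        raise ValueError(f"missing arguments: {missing}")
    return IMPLEMENTATIONS[spec["name"]](**args)
```

Validating required arguments before dispatch makes a malformed model output fail early. The resulting error is clear enough for the agent to feed back to the model, instead of surfacing as a crash deep inside a tool.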

The entire flow relies on a feedback loop. The execution engine reports back status updates; the planner adjusts. This isn’t a brittle script. It’s a living system that adapts in real time.

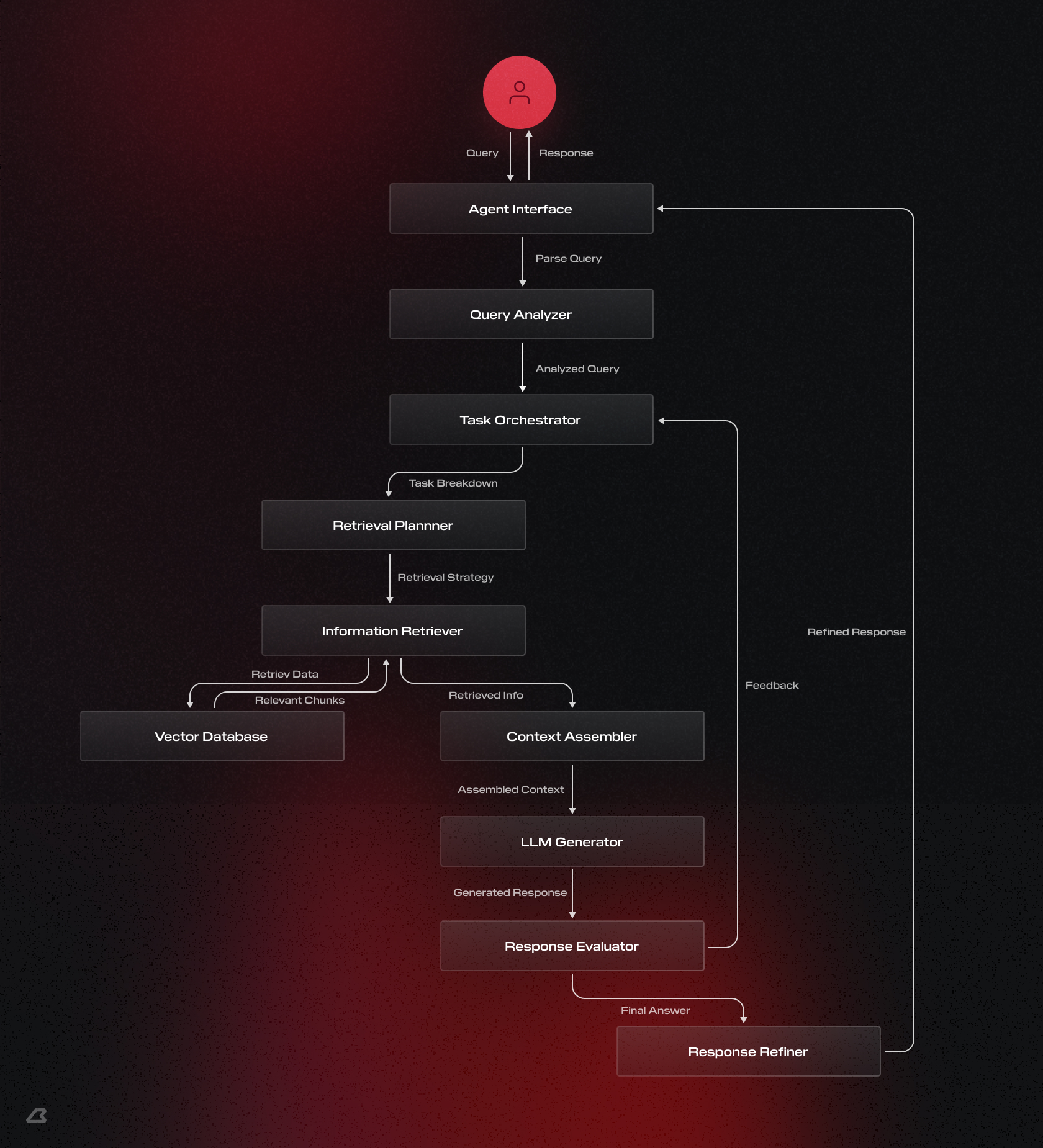

System Diagram: Agent

This kind of loop-based, stateful logic is already being implemented in production using state graph orchestration frameworks and multi-agent toolchains.
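The core idea behind state-graph orchestration fits in a small class. `MiniGraph` below is a hypothetical illustration, not the API of any particular framework. Each node returns updates to a shared state, and a router function attached to each node decides where to go next. That routing is what lets the graph loop (act, check, act again) instead of running a fixed pipeline.

```python
from typing import Any, Callable, Dict

State = Dict[str, Any]
END = "END"


class MiniGraph:
    """Minimal state-graph runner: nodes return state updates, routers choose the next node."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Callable[[State], State]] = {}
        self.routers: Dict[str, Callable[[State], str]] = {}

    def add_node(self, name: str, fn: Callable[[State], State],
                 router: Callable[[State], str]) -> None:
        self.nodes[name] = fn
        self.routers[name] = router

    def run(self, start: str, state: State, max_steps: int = 20) -> State:
        node = start
        for _ in range(max_steps):
            updates = self.nodes[node](state)
            # Merge updates into a new state dict and record which node ran.
            state = {**state, **updates, "trace": state.get("trace", []) + [node]}
            node = self.routers[node](state)
            if node == END:
                return state
        raise RuntimeError("step budget exhausted before reaching END")


# Example graph: plan once, then loop act -> check until every planned step is done.
def plan_node(state: State) -> State:
    return {"todo": ["fetch", "parse", "report"], "done": []}


def act_node(state: State) -> State:
    next_step = state["todo"][len(state["done"])]
    return {"done": state["done"] + [next_step]}


def check_node(state: State) -> State:
    return {}  # a real check might validate outputs or detect errors here


def route_after_check(state: State) -> str:
    return END if len(state["done"]) == len(state["todo"]) else "act"


graph = MiniGraph()
graph.add_node("plan", plan_node, lambda s: "act")
graph.add_node("act", act_node, lambda s: "check")
graph.add_node("check", check_node, route_after_check)
final = graph.run("plan", {})
```

The `max_steps` budget is the graph-level equivalent of a replan cap. A loop that never satisfies its exit condition stops with an error instead of running indefinitely.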

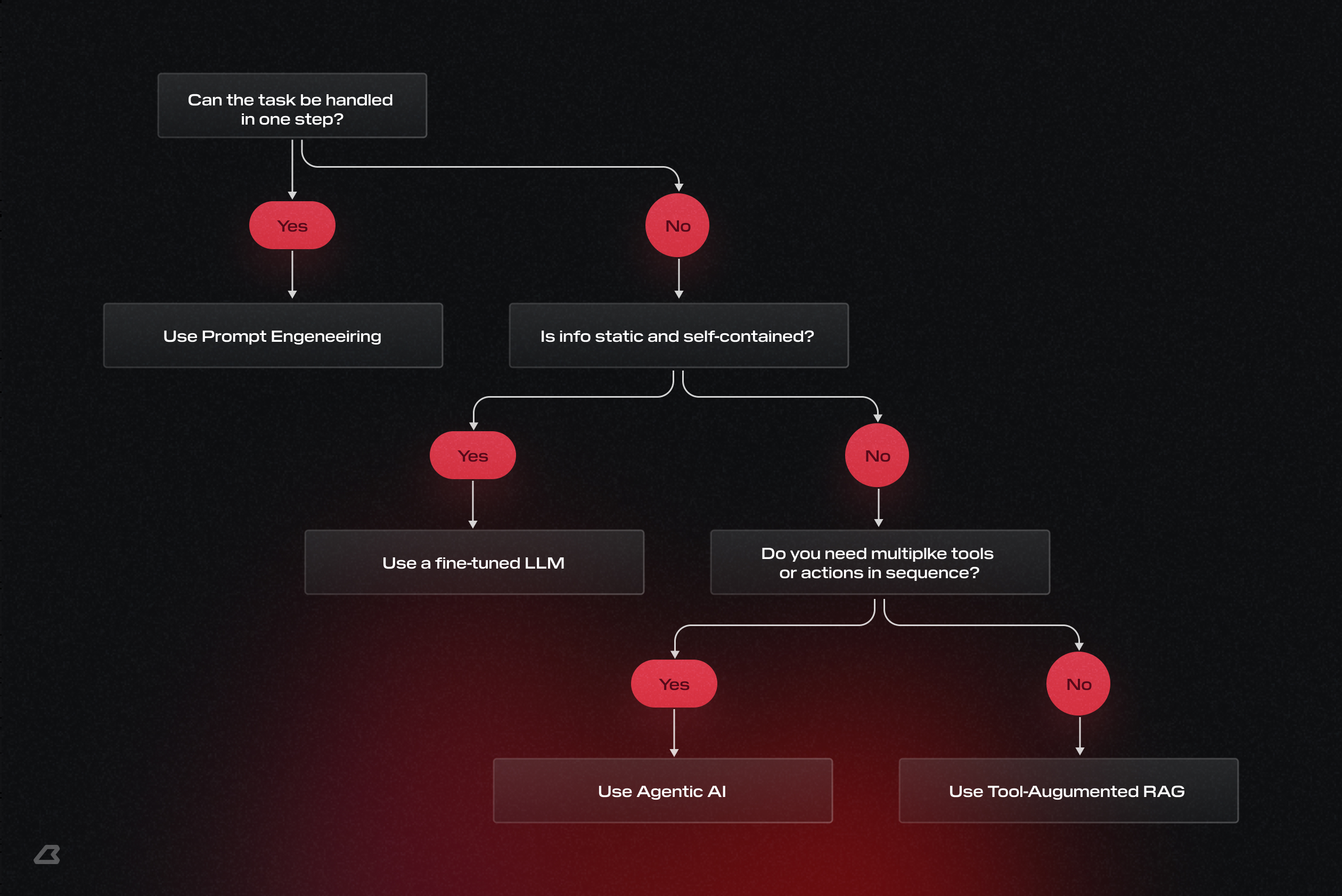

When to Use Agentic AI (vs Prompting or RAG)

Not every problem needs an agent. Overbuild, and you’ll end up solving one-step tasks with a full-blown stack – slow, expensive, and unnecessary.

To help teams evaluate where agentic logic makes sense, it’s useful to frame the decision based on three real-world conditions:

- If the task is simple, self-contained, and requires no external actions – a direct prompt is enough.

- If you need to ground answers in external documents or sources – RAG will usually do the job.

- But when execution matters, when the task needs reasoning, tools, retries, or multiple steps – that’s where agentic AI becomes necessary.
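The three rules of thumb can be written as a small decision function. The `Task` fields are a simplification assumed for illustration; in particular, a step count stands in for multi-step reasoning.

```python
from dataclasses import dataclass


@dataclass
class Task:
    needs_external_docs: bool     # must answers be grounded in documents or sources?
    needs_actions: bool           # must the system call tools or change external state?
    steps: int                    # rough number of dependent steps (proxy for reasoning depth)
    needs_retries: bool = False   # must it recover from failures mid-task?


def choose_approach(task: Task) -> str:
    """Encode the three conditions above; the fields and threshold are illustrative."""
    if task.needs_actions or task.needs_retries or task.steps > 1:
        return "agent"
    if task.needs_external_docs:
        return "rag"
    return "prompt"
```

The agent check comes first on purpose. Consider a task that needs both grounding and actions, such as answering from a policy document and then filing a refund. That is an agent problem that happens to use retrieval as one of its tools.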

As our technical expert at Busy Rebel notes, this transition usually happens when systems need “decision-awareness,” not just “language awareness.” That includes cases like customer support automation, data processing workflows, or assistant-style operations where the model has to reason over state, take action, and follow up.

This logic tree helps teams choose based on real architectural trade-offs: control, cost, tool orchestration, and performance constraints. In practice, agentic AI becomes essential when single-shot or retrieval-only methods hit a wall.

Real-Time Learning and Execution Flow

Once an agent is in the loop, it has to do more than just execute. It has to respond to reality.

Agentic systems operate in unpredictable environments. APIs fail, tools return null results, context changes mid-task. Static logic won’t cut it. That’s why agents follow an execution loop that allows them to adapt in real time.

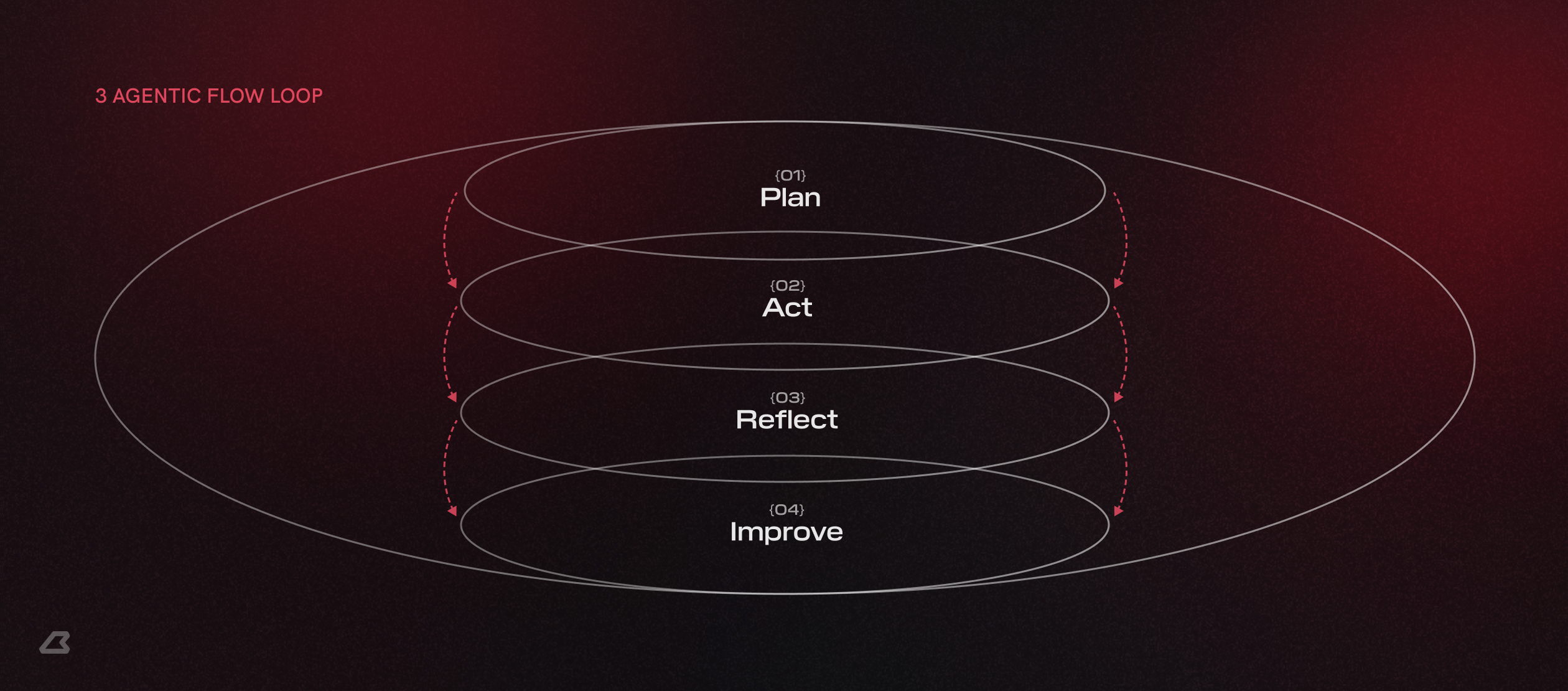

Each stage feeds into the next. The agent generates a plan, takes action, observes the outcome, and if something breaks, it doesn’t stop. It revises the plan and tries again. It’s not magic. It’s structured iteration.

Our expert highlights that these feedback cycles are what allow agents to recover mid-process. Instead of halting, they replan with updated inputs: swapping tools, tweaking parameters, or even changing strategy altogether. That’s what makes agentic logic resilient and production-ready.
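One way to implement that recovery behaviour is an ordered list of strategies. The agent first retries transient failures with exponential backoff, then falls back to tweaked parameters, and finally switches to a different tool. The helper and the order-search tools below are hypothetical. `TimeoutError` stands in for whatever transient errors your tools actually raise.

```python
import time
from typing import Callable, Dict, List, Optional, Tuple

Tool = Callable[[Dict], Optional[str]]


def call_with_recovery(strategies: List[Tuple[str, Tool, Dict]],
                       retries_per_strategy: int = 2,
                       base_delay_s: float = 0.0) -> Tuple[str, str]:
    """Try each (name, tool, params) strategy in order.

    Transient errors (TimeoutError here) are retried with exponential backoff.
    An empty result moves on to the next strategy, since retrying it unchanged
    would most likely return the same thing.
    """
    errors: List[str] = []
    for name, tool, params in strategies:
        for attempt in range(retries_per_strategy):
            try:
                result = tool(params)
            except TimeoutError as exc:
                errors.append(f"{name} attempt {attempt}: {exc}")
                time.sleep(base_delay_s * (2 ** attempt))
                continue
            if result:
                return name, result
            errors.append(f"{name} attempt {attempt}: empty result")
            break
    raise RuntimeError("all strategies failed: " + "; ".join(errors))


# Hypothetical order-search tool: times out once, and the strict filter finds nothing.
calls = {"n": 0}


def search_orders(params: Dict) -> Optional[str]:
    calls["n"] += 1
    if calls["n"] == 1:
        raise TimeoutError("upstream timeout")
    return None if params["strict"] else "3 matching orders"


def search_archive(params: Dict) -> Optional[str]:
    return "1 archived order"


strategies = [
    ("strict search", search_orders, {"query": "order 123", "strict": True}),
    ("relaxed search", search_orders, {"query": "order 123", "strict": False}),  # tweak parameters
    ("archive search", search_archive, {"query": "order 123"}),                  # swap tools
]
outcome = call_with_recovery(strategies)
```

Here the first strategy times out once, then returns nothing under its strict filter. The agent relaxes the filter and succeeds without ever reaching the archive tool.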

This loop isn’t just theory. It’s being codified in agentic stacks from leading teams. Infrastructure choices today are shaped around this need for continuous reflection and response.

What to Build On: Infrastructure for Agentic Systems

A working agent is only the visible tip of the stack. Under the hood, you need fast context stores, reliable planners, fault-tolerant execution chains, and glue to hold it together.

While the surface might look like “just a smart assistant,” the backend includes:

- A coordination layer for managing planner–executor interactions

- Fast-access memory (Redis, in-memory cache) for short-term state

- Vector databases for long-term semantic memory

- Tool routers and execution backends with error tracking

- Event logs for reasoning replay and failure analysis
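The event log is the simplest of these components to sketch. Below, an append-only JSON Lines file records each planning step, tool call, error, and replan with a run ID, so a single run can be replayed in order. The event schema (`kind`, `run_id`, and the extra fields) is an assumption for illustration. Production systems typically ship these events to a tracing or observability backend rather than a local file.

```python
import json
import os
import tempfile
import time
from typing import Any, Dict, Iterator, Optional


class EventLog:
    """Append-only JSON Lines log of agent events (hypothetical schema)."""

    def __init__(self, path: str) -> None:
        self.path = path

    def record(self, run_id: str, kind: str, **data: Any) -> None:
        event = {"ts": time.time(), "run_id": run_id, "kind": kind, **data}
        with open(self.path, "a", encoding="utf-8") as f:
            f.write(json.dumps(event) + "\n")

    def replay(self, run_id: str) -> Iterator[Dict[str, Any]]:
        """Yield one run's events in the order they were recorded."""
        with open(self.path, encoding="utf-8") as f:
            for line in f:
                event = json.loads(line)
                if event["run_id"] == run_id:
                    yield event

    def first_failure(self, run_id: str) -> Optional[Dict[str, Any]]:
        """Return the first tool error in a run, the usual starting point for analysis."""
        return next((e for e in self.replay(run_id) if e["kind"] == "tool_error"), None)


log = EventLog(os.path.join(tempfile.mkdtemp(), "agent_events.jsonl"))
log.record("run-1", "plan", steps=["search", "summarize"])
log.record("run-1", "tool_call", tool="search", args={"q": "refund policy"})
log.record("run-1", "tool_error", tool="search", error="timeout")
log.record("run-1", "replan", reason="switch to backup search")
log.record("run-2", "plan", steps=["summarize"])
```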

The architecture you choose depends on how dynamic your agents are. Stateless agent flows can run in serverless environments. But if your agent replans, caches memory, or manages multi-turn flows, you’ll likely need persistent memory stores, queueing systems, and observability tools.
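The stateless/stateful distinction is mostly about where state lives. In this sketch, the handler process keeps nothing between calls, as a serverless function would. A plain dict stands in for Redis or a database, and the step names are hypothetical. Because every turn loads and saves the session, a multi-turn flow survives even if each turn lands on a different instance.

```python
import json
from typing import Dict, Optional

# Stand-in for an external store such as Redis or a database table.
STORE: Dict[str, str] = {}


def handler(session_id: str, user_message: str) -> str:
    """Serverless-style handler: holds no state in the process between calls.

    The session's state is loaded from the external store, advanced by one step,
    and written back, so any instance can serve the next turn.
    """
    raw: Optional[str] = STORE.get(session_id)
    if raw:
        state = json.loads(raw)
    else:  # first turn: create a fresh plan (hypothetical step names)
        state = {"turns": [], "pending_steps": ["collect_order_id", "check_status"]}
    state["turns"].append(user_message)
    step = state["pending_steps"].pop(0) if state["pending_steps"] else None
    STORE[session_id] = json.dumps(state)  # persist before returning
    return f"working on {step}" if step else "all steps complete"
```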

Our expert emphasizes that building production-grade agentic systems means thinking beyond LLMs. Execution, not just generation, becomes the limiting factor, and that’s where infrastructure either scales or breaks. Recent agentic infrastructure releases reflect how leading platforms are rethinking architecture around autonomous, feedback-driven agents.

This architecture outlines how components like planners, retrievers, evaluators, and memory layers interact in a real-world agentic RAG system, turning a user query into a multi-stage reasoning and response flow.
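Here is a toy version of that flow. It assumes very simple stand-ins for each component:

- The planner splits a compound question on "and".
- The retriever scores documents by keyword overlap instead of vector search.
- The evaluator keeps only documents above a relevance threshold.

Memory layers are omitted. Everything here, including the corpus, is illustrative.

```python
from typing import Dict, List

# Toy document store; a real system would use a vector database.
CORPUS: Dict[str, str] = {
    "doc1": "refunds are issued within 14 days of a return",
    "doc2": "shipping to europe takes 5 to 7 business days",
    "doc3": "returns require the original receipt",
}


def plan_subqueries(query: str) -> List[str]:
    """Planner stand-in: split a compound question into sub-questions."""
    return [part.strip() for part in query.lower().split(" and ") if part.strip()]


def retrieve(subquery: str) -> Dict[str, float]:
    """Retriever stand-in: score each document by keyword overlap with the sub-question."""
    terms = set(subquery.split())
    return {doc_id: len(terms & set(text.split())) / len(terms)
            for doc_id, text in CORPUS.items()}


def evaluate(scores: Dict[str, float], threshold: float = 0.5) -> List[str]:
    """Evaluator stand-in: keep only documents relevant enough to ground an answer."""
    return sorted(doc_id for doc_id, s in scores.items() if s >= threshold)


def agentic_rag(query: str) -> Dict[str, object]:
    evidence: Dict[str, List[str]] = {}
    unanswered: List[str] = []
    for sub in plan_subqueries(query):
        kept = evaluate(retrieve(sub))
        if kept:
            evidence[sub] = kept
        else:
            unanswered.append(sub)  # candidate for query rewriting, another source, or abstaining
    return {"evidence": evidence, "unanswered": unanswered}
```

Sub-questions the evaluator cannot ground come back as `unanswered`. At that point an agentic RAG system would rewrite the query, try another source, or decline to answer rather than guess.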

This builds on ideas we covered in our deep dive on Agentic RAG, where we explored how retrieval and reasoning can be combined inside agent workflows.

Final Thoughts: Agentic AI Isn’t a Buzzword – It’s a Shift

Agents aren’t a feature. They’re a shift. When systems begin to plan, retry, and improve, we stop thinking in prompts and start thinking in loops.

Instead of stateless text generators, we’re building systems that plan, act, reflect, and adapt. That has architectural consequences: tool-aware execution layers, memory strategies, and feedback loops become core infrastructure, not optional add-ons.

For teams building AI systems that do, not just say, this evolution isn’t optional. Agentic logic makes autonomous workflows viable, and it’s already reshaping how platforms approach scalability, stability, and relevance.

As David Alami, PhD and AI Engineer at Busy Rebel, puts it:

“It’s already clear that for anything beyond simple Q&A, agentic approaches are more scalable and flexible. As tools get better described interfaces and LLMs are becoming more intelligent, this will likely become the standard architecture.”

The future of AI agents isn’t hypothetical; it’s operational. The systems that win will be the ones that can reason, act, recover, and improve on their own.